Overview

This page provides supporting material for the hands-on session of the CloudCom 2014 Tutorial: Programming Elasticity in the Cloud.

Elasticity is widely seen as one of the main characteristics of cloud computing today. However, elasticity is often reduced to scaling computational resources in/out, i.e., to resource elasticity. In fact, elasticity is multi-dimensional: it can be based on resource, quality, and cost/benefit dimensions, each of which can be further divided into several sub-dimensions.

In this tutorial we will present the concept of multi-dimensional elasticity, the main principles of elasticity, and how these principles play a role in the development and integration of software services, people, and things into native cloud applications/systems, which can be modeled, programmed, and deployed on a large scale across multiple cloud infrastructures.

The hands-on session of the tutorial focuses on programming elasticity using our COMOT toolset. To reduce complexity, COMOT will be deployed on a personal laptop or a single virtual machine, and its features will be demonstrated on an elastic service running on a small Docker-based "private cloud". We also provide an example COMOT elastic cloud service description tailored for an environment with limited resources.

The tutorial will cover the following steps:

Note: Deploying COMOT downloads about 800 MB to 1 GB of data, comprising the COMOT platform and the Docker image it uses. We recommend performing this step over a fast Internet connection.

Requirements

COMOT is implemented as a service-oriented distributed platform in which multiple standalone services interact to provide its complete functionality, from describing elastic services to monitoring and controlling them.

Resource requirements

To increase usability, we provide two deployment configurations for COMOT:

- Distributed deployment:
  - Each service runs isolated in its own web container
  - Provides high performance, at the cost of high memory usage
  - Minimum 4 GB RAM, recommended 6 GB
- Compact deployment:
  - All services except elasticity control are deployed in the same web container
  - Significantly lower memory usage, at the cost of somewhat reduced performance
  - Minimum 3 GB RAM, recommended 4 GB

Software requirements

The tutorial was tested on and tailored for Ubuntu 14.04 and Ubuntu 14.10.

Elastic Cloud Service

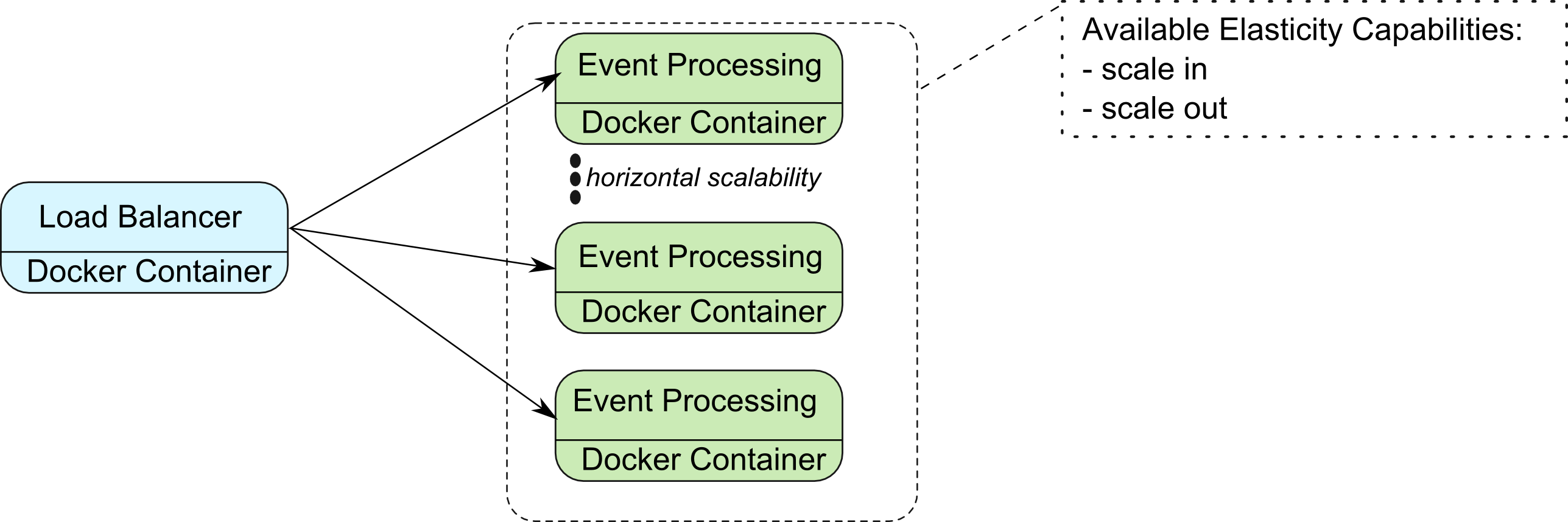

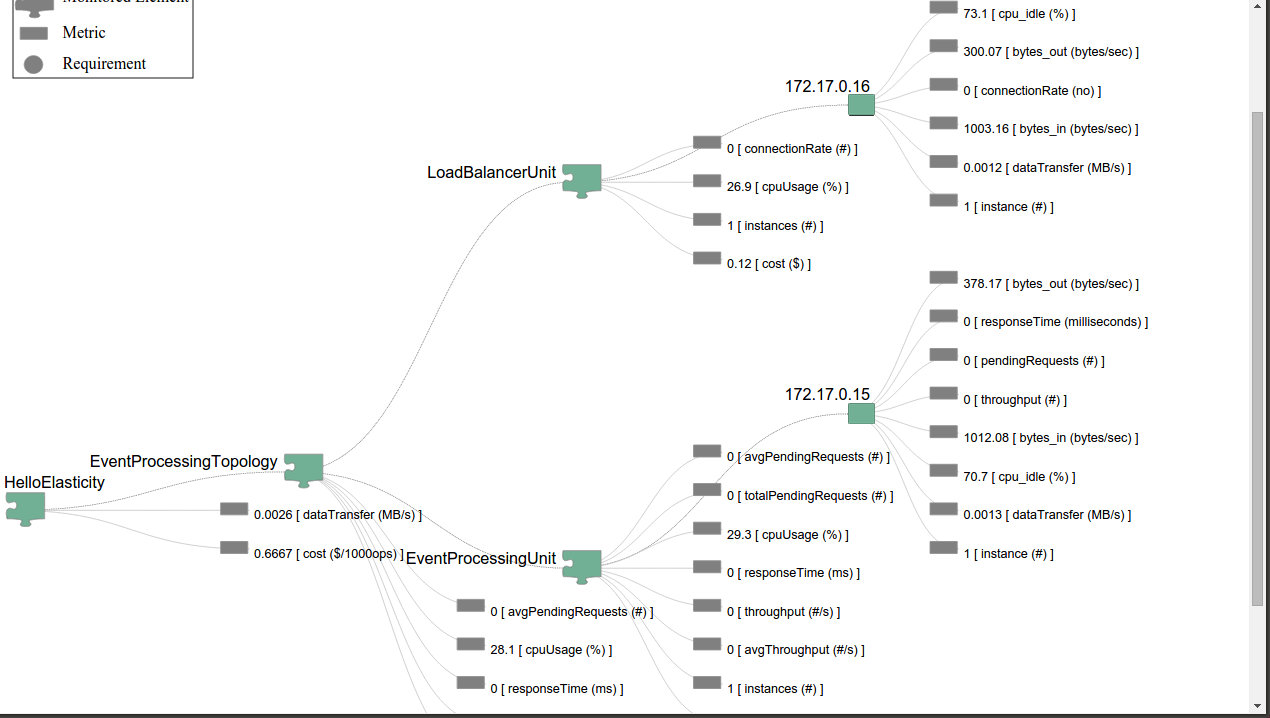

The pilot service for this tutorial contains two units: (i) a fixed load balancer unit, and (ii) an elastic event processing unit, as depicted in the figure. After deploying COMOT, this pilot service will be described, deployed, and monitored, and its elasticity will be analyzed and controlled (as shown in the Tutorial section).

The load balancer enables scaling the event processing unit in and out. Using MELA, we measure and analyze response time and throughput as application-level metrics, on which we base the SYBL elasticity requirements.

Deploying COMOT

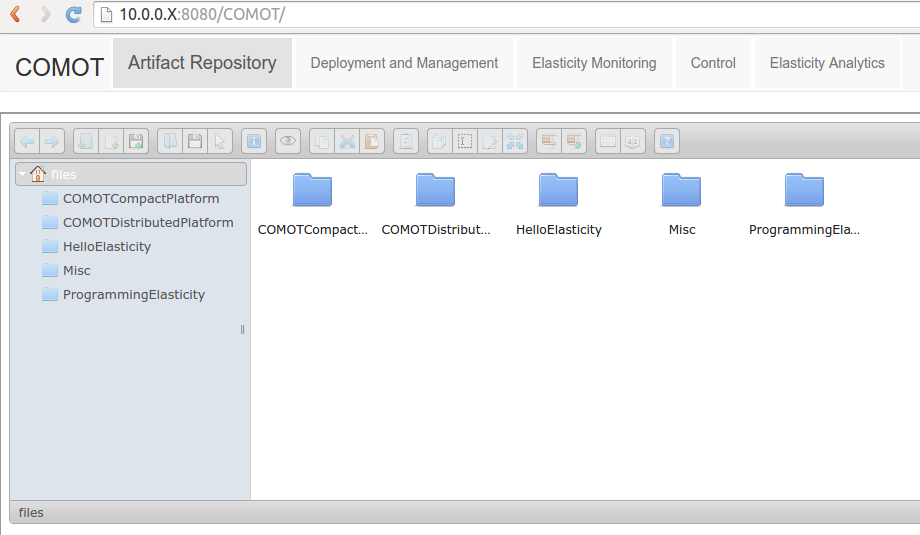

Built, ready-to-use versions of COMOT are available in our Software Repository. The repository also contains the software artifacts of the elastic service used in the tutorial.

We provide separate install and uninstall scripts for both the compact and the distributed COMOT deployments. Depending on the desired deployment, please use the appropriate scripts.

Note 1: Deploying COMOT downloads about 800 MB to 1 GB of data, comprising the COMOT platform and the Docker image it uses.

Note 2: While the steps below use the COMOT compact deployment as an example, the same approach applies to the distributed deployment.

To deploy COMOT, follow these steps:

$ mkdir Tutorial

$ cd Tutorial

$ wget http://128.130.172.215/repository/files/COMOTCompactPlatform/installCOMOT.sh

$ bash installCOMOT.shA prompt will appear and ask for a publicly accessible IP of the machine on which COMOT is deployed to be specified. This is used to correctly configure the web-based COMOT Dashboard.

Is this a virtual machine/container?

Please select 1(No) if this is the local machine, 2(Yes) if this is a separate machine, 3(Quit)

1) Yes

2) No

3) Quit

$? 1 [please enter 1 if the tutorial is run on local machine]

$? 2 [please enter 2 if the machine is a remote one, i.e., not accessible as "localhost"]

Please enter a PUBLICLY ACCESSIBLE IP for this machine to setup COMOT Dashboard accordingly

10.0.0.X [if 2 was selected, please enter instead of "10.0.0.X" the public IP of the machine]

...

[ installation progress will be reported ]

...

Waiting for COMOT Dashboard to start

COMOT deployed. Please access COMOT Dashboard at http://10.0.0.X:8080/COMOTFor uninstalling COMOT, please run the uninstallation script for the respective deployment

# wget http://128.130.172.215/repository/files/COMOTCompactPlatform/uninstallCOMOT.sh

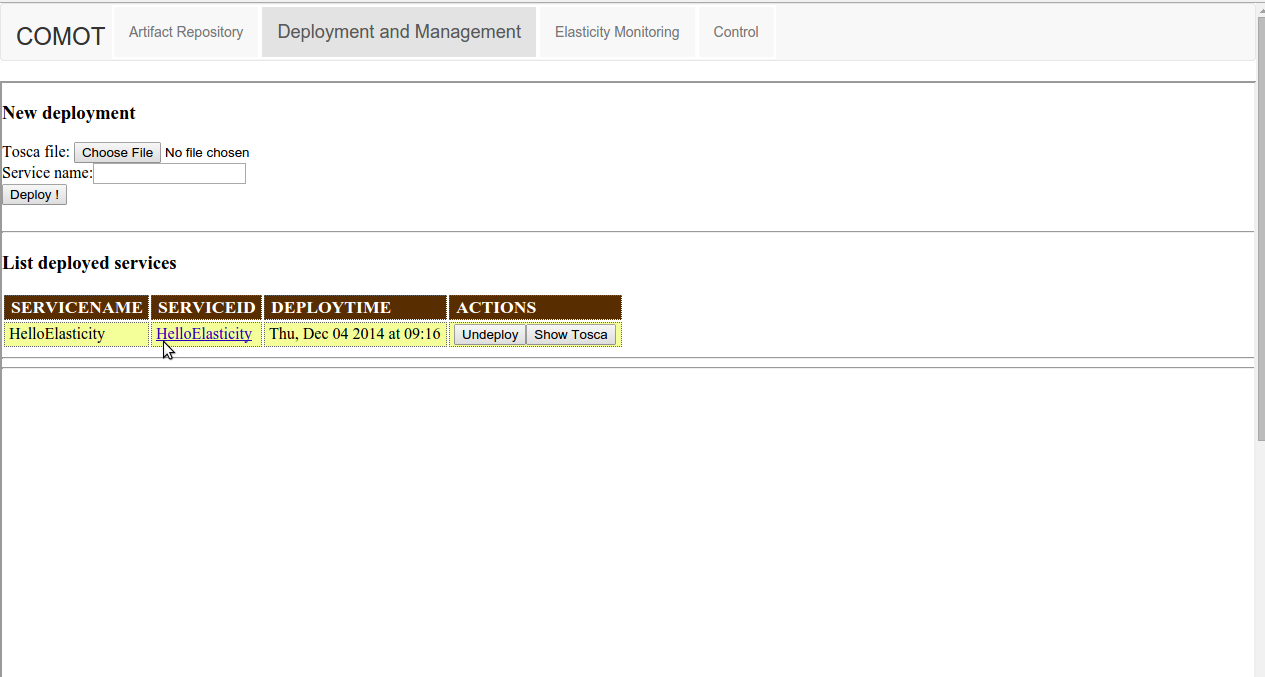

# bash ./uninstallCOMOT.shIf deployment is successful, the COMOT Dashboard should be accessible as below:
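To check from a script that the Dashboard has come up (for example when COMOT runs on a remote machine), one can poll the Dashboard URL printed at the end of the installation until it responds. The following is a minimal Java 11+ sketch using the standard `java.net.http` client; the class and method names are hypothetical, and treating any 2xx response as "up" is our assumption, not documented COMOT behavior.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.time.Duration;

class DashboardCheck {

    // URL format taken from the install script's final message (port 8080, path /COMOT).
    static String dashboardUrl(String host) {
        return "http://" + host + ":8080/COMOT";
    }

    // Polls the URL until it answers with a 2xx status or the attempts are used up.
    static boolean waitForDashboard(String url, int attempts, Duration pause)
            throws InterruptedException {
        HttpClient client = HttpClient.newBuilder()
                .connectTimeout(Duration.ofSeconds(5))
                .followRedirects(HttpClient.Redirect.NORMAL)
                .build();
        HttpRequest request = HttpRequest.newBuilder(URI.create(url)).GET().build();
        for (int attempt = 1; attempt <= attempts; attempt++) {
            try {
                HttpResponse<Void> response =
                        client.send(request, HttpResponse.BodyHandlers.discarding());
                if (response.statusCode() / 100 == 2) { // assumption: any 2xx means "up"
                    return true;
                }
            } catch (IOException e) {
                // Not reachable yet (e.g., connection refused); retry after the pause.
            }
            Thread.sleep(pause.toMillis());
        }
        return false;
    }

    public static void main(String[] args) throws InterruptedException {
        // Pass the public IP given to the install script, e.g. 10.0.0.X; defaults to localhost.
        String host = args.length > 0 ? args[0] : "localhost";
        String url = dashboardUrl(host);
        boolean up = waitForDashboard(url, 30, Duration.ofSeconds(10));
        System.out.println((up ? "Dashboard reachable at " : "Dashboard not reachable at ") + url);
    }
}
```

With 30 attempts and a 10-second pause, the sketch waits roughly five minutes before giving up; both values are arbitrary and can be tuned.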

Tutorial: Programming Elasticity in the Cloud

1. Program elasticity from a Java developer's perspective

We provide a Java-based description language/API for describing elastic cloud services, from their software artifacts to their elasticity capabilities and requirements.

We prepared a Java Maven project containing the description of the pilot elastic service, ready to be deployed and controlled.

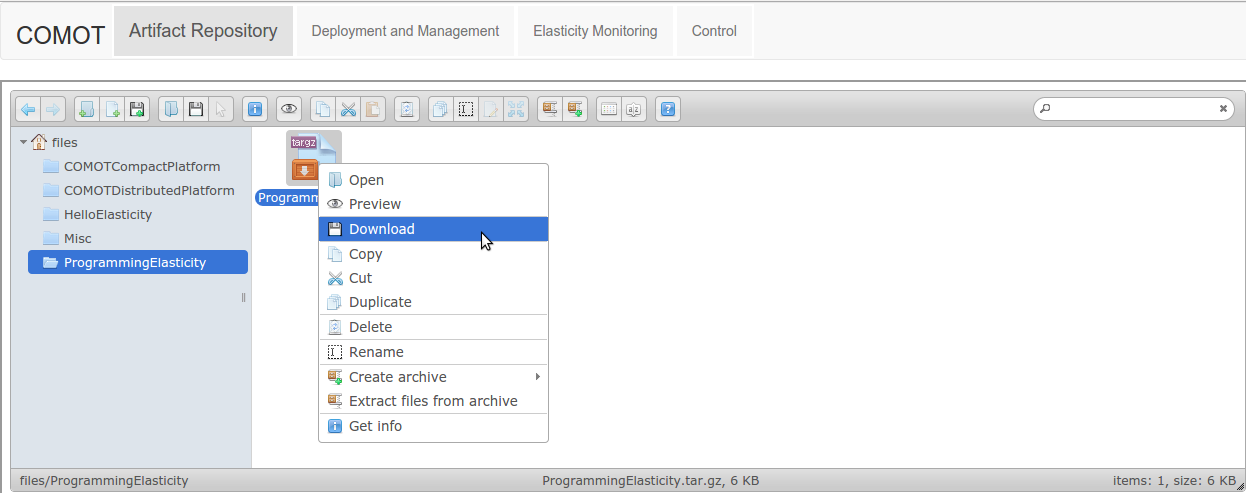

a. Download programming elasticity example project

From the COMOT Dashboard (http://10.x.x.x:8080/COMOT), open the Artifact Repository, select the Programming Elasticity folder, then download and extract the Java Maven project ProgrammingElasticity.tar.gz.

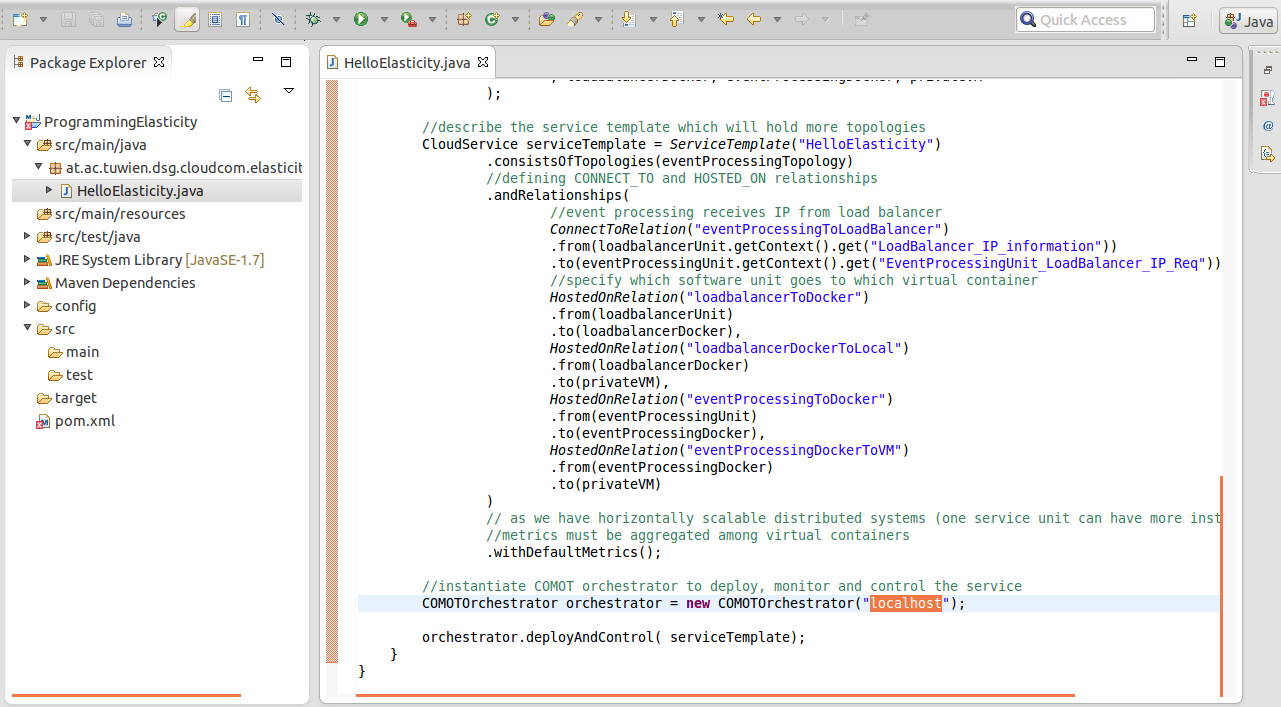

b. Run the programming elasticity example

Enter the publicly accessible IP of COMOT (i.e., of the machine COMOT is deployed on) in ./src/at/ac/tuwien/dsg/cloudcom/elasticityDemo/HelloElasticity and run the provided example.

The elasticity concepts captured by the provided example are explained below.

First, we describe the required deployment stack, with Docker as its base. (Each excerpt below shows one aspect of a unit's description, so the same unit may appear in several excerpts.)

```java
// Base of the deployment stack: the local Docker installation on the personal laptop
OperatingSystemUnit privateVM = OperatingSystemUnit("PersonalLaptop")
        .providedBy(LocalDocker());
```

Next, we describe the Docker containers that will hold our software:

```java
// Docker container for the load balancer
DockerUnit loadbalancerDocker = DockerUnit("LoadBalancerUnitDocker")
        .providedBy(DockerDefault());

// Docker container for event processing
DockerUnit eventProcessingDocker = DockerUnit("EventProcessingUnitDocker")
        .providedBy(DockerDefault());
```

We treat elasticity capabilities (i.e., what can be done with each unit) as first-class citizens, which can have different properties. While in this case we use the default definitions of scaleOut and scaleIn, capabilities can be more complex, e.g., specifying several primitives to be executed for each action. For example, a scale out/in might imply "reconfigure load balancer" and then "de/allocate unit".

```java
// Default scale-out and scale-in capabilities
ElasticityCapability scaleOutEventProcessing = ElasticityCapability.ScaleOut();
ElasticityCapability scaleInEventProcessing = ElasticityCapability.ScaleIn();
```

A service can contain different software artifacts used to deploy and run it. Thus, we specify the location of the software repository holding our artifacts, in this case our own repository:

```java
String salsaRepo = "http://128.130.172.215/repository/files/HelloElasticity/";
```

As deploying an elastic service can imply service-specific configuration, each software unit is deployed by an artifact:

```java
// Each unit is deployed by a script fetched from the artifact repository
ServiceUnit eventProcessingUnit = SingleSoftwareUnit("EventProcessingUnit")
        .deployedBy(SingleScriptArtifact(salsaRepo + "deployEventProcessing.sh"));
ServiceUnit loadbalancerUnit = SingleSoftwareUnit("LoadBalancerUnit")
        .deployedBy(SingleScriptArtifact(salsaRepo + "deployLoadBalancer.sh"));
```

To function, a unit may need information about other units. For example, the event processing unit needs the IP of the load balancer, so that it knows where to register after a new instance is created and where to deregister before an instance is deallocated by a scaling action:

```java
// The load balancer exposes its IP ...
ServiceUnit loadbalancerUnit = SingleSoftwareUnit("LoadBalancerUnit")
        .exposes(Capability.Variable("LoadBalancer_IP_information"));

// ... which the event processing unit requires
ServiceUnit eventProcessingUnit = SingleSoftwareUnit("EventProcessingUnit")
        .requires(Requirement.Variable("EventProcessingUnit_LoadBalancer_IP_Req"));

// Event processing receives the IP from the load balancer
// (excerpt from the list of relationships in the service description)
ConnectToRelation("eventProcessingToLoadBalancer")
        .from(loadbalancerUnit.getContext().get("LoadBalancer_IP_information"))
        .to(eventProcessingUnit.getContext().get("EventProcessingUnit_LoadBalancer_IP_Req"))
```

Each unit may support, and have enabled, different elasticity capabilities:

```java
ElasticityCapability scaleOutEventProcessing = ElasticityCapability.ScaleOut();
ElasticityCapability scaleInEventProcessing = ElasticityCapability.ScaleIn();

// The event processing unit offers both capabilities
ServiceUnit eventProcessingUnit = SingleSoftwareUnit("EventProcessingUnit")
        .provides(scaleOutEventProcessing, scaleInEventProcessing);
```

Depending on the implementation, we may want certain actions to be executed at different stages of a unit's life cycle, from deployment to service unit start, stop, and undeployment. In our pilot service, the event processing unit must be shut down gracefully before a scale in, so that it deregisters itself from the load balancer:

```java
// Graceful shutdown: stopping the service deregisters the instance from the load balancer
ServiceUnit eventProcessingUnit = SingleSoftwareUnit("EventProcessingUnit")
        .withLifecycleAction(LifecyclePhase.STOP, BASHAction("sudo service event-processing stop"));
```

To be elastic, units can have strategies or constraints associated with them, which are used to control the units' runtime behavior. In the following we focus on strategies, which are imperative commands specifying which elasticity capability to enforce and when. We also support constraints, which only state the desired behavior; the controller must then automatically find the best capability to enforce. This requires additional information, such as the effect of enforcing each capability.

```java
ServiceUnit eventProcessingUnit = SingleSoftwareUnit("EventProcessingUnit")
        .controlledBy(
                // WHEN responseTime < 500 ms AND avgThroughput < 50 : SCALE IN
                Strategy("EP_ST1")
                        .when(Constraint.MetricConstraint("EP_ST1_CO1", new Metric("responseTime", "ms")).lessThan("500"))
                        .and(Constraint.MetricConstraint("EP_ST1_CO2", new Metric("avgThroughput", "#")).lessThan("50"))
                        .enforce(scaleInEventProcessing));
```

Individual units can be grouped into higher-level constructs, called topologies:

```java
ServiceTopology eventProcessingTopology = ServiceTopology("EventProcessingTopology")
        .withServiceUnits(loadbalancerUnit, eventProcessingUnit,
                // add the VM/container types to the topology
                loadbalancerDocker, eventProcessingDocker, privateVM);
```

The hosting relationships must be specified, so that it is clear what is hosted on what and, thus, in which order units are deployed:

```java
// The load balancer software unit resides in its own Docker container
HostedOnRelation("loadbalancerToDocker")
        .from(loadbalancerUnit)
        .to(loadbalancerDocker)
```
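Because each unit has at most one host, a valid deployment order is any order in which a host comes before everything hosted on it. The following plain-Java sketch (not part of the COMOT API; class and method names are hypothetical) derives such an order with Kahn's topological sort. Beyond the single relation shown above, it assumes that the event processing unit is hosted on its own container and that both containers run on the PersonalLaptop unit.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

class DeploymentOrder {

    // hostedOn maps each unit to the unit it runs on; returns hosts before hosted units.
    static List<String> order(Map<String, String> hostedOn) {
        // Collect every unit mentioned, keeping a stable (insertion) order.
        Set<String> units = new LinkedHashSet<>();
        for (Map.Entry<String, String> e : hostedOn.entrySet()) {
            units.add(e.getValue());
            units.add(e.getKey());
        }
        Map<String, Integer> waitingFor = new HashMap<>();       // undeployed hosts per unit
        Map<String, List<String>> hostedUnits = new HashMap<>(); // host -> units it hosts
        for (String u : units) {
            waitingFor.put(u, 0);
            hostedUnits.put(u, new ArrayList<>());
        }
        for (Map.Entry<String, String> e : hostedOn.entrySet()) {
            waitingFor.put(e.getKey(), waitingFor.get(e.getKey()) + 1);
            hostedUnits.get(e.getValue()).add(e.getKey());
        }
        // Kahn's algorithm: start with units that are not hosted on anything.
        Deque<String> ready = new ArrayDeque<>();
        for (String u : units) {
            if (waitingFor.get(u) == 0) {
                ready.add(u);
            }
        }
        List<String> result = new ArrayList<>();
        while (!ready.isEmpty()) {
            String host = ready.poll();
            result.add(host);
            for (String hosted : hostedUnits.get(host)) {
                int left = waitingFor.get(hosted) - 1;
                waitingFor.put(hosted, left);
                if (left == 0) {
                    ready.add(hosted);
                }
            }
        }
        if (result.size() != units.size()) {
            throw new IllegalArgumentException("cyclic hosted-on relations");
        }
        return result;
    }

    public static void main(String[] args) {
        Map<String, String> hostedOn = new LinkedHashMap<>();
        hostedOn.put("LoadBalancerUnit", "LoadBalancerUnitDocker");
        hostedOn.put("EventProcessingUnit", "EventProcessingUnitDocker");   // assumed
        hostedOn.put("LoadBalancerUnitDocker", "PersonalLaptop");           // assumed
        hostedOn.put("EventProcessingUnitDocker", "PersonalLaptop");        // assumed
        System.out.println(order(hostedOn));
        // [PersonalLaptop, LoadBalancerUnitDocker, EventProcessingUnitDocker, LoadBalancerUnit, EventProcessingUnit]
    }
}
```

Kahn's algorithm also detects inconsistent descriptions: if units end up waiting on each other, not every unit can be scheduled and the sketch reports a cycle.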

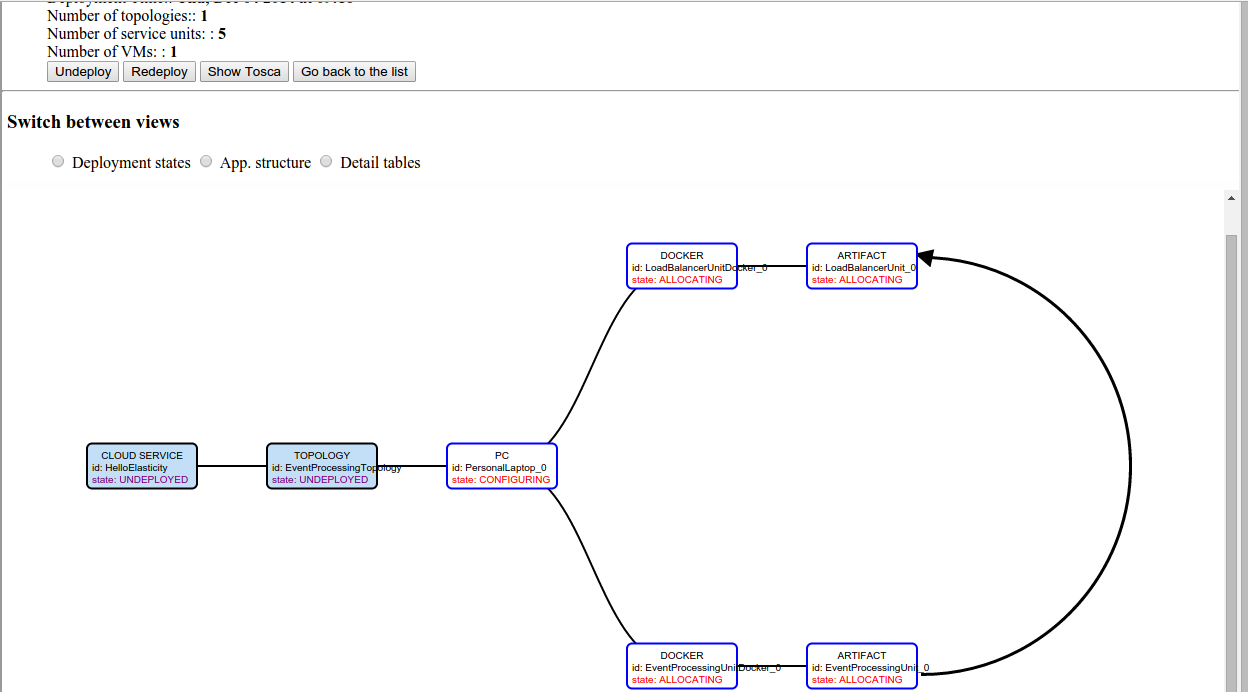

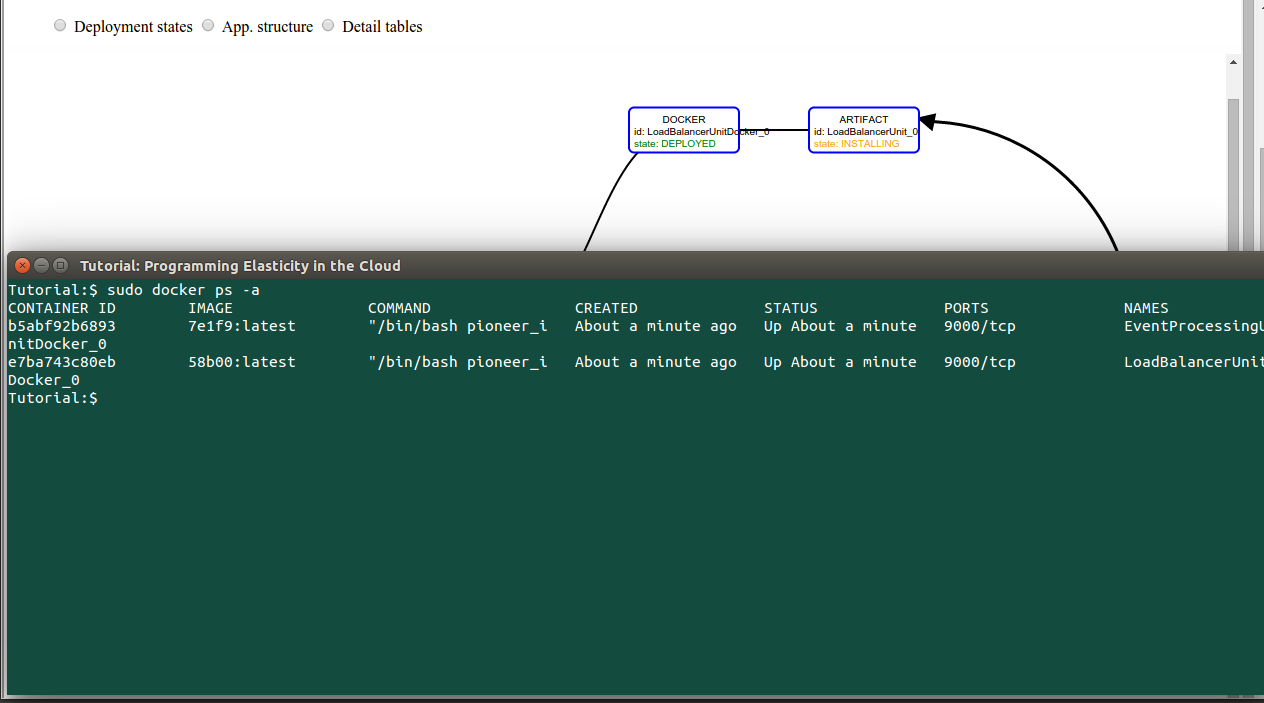

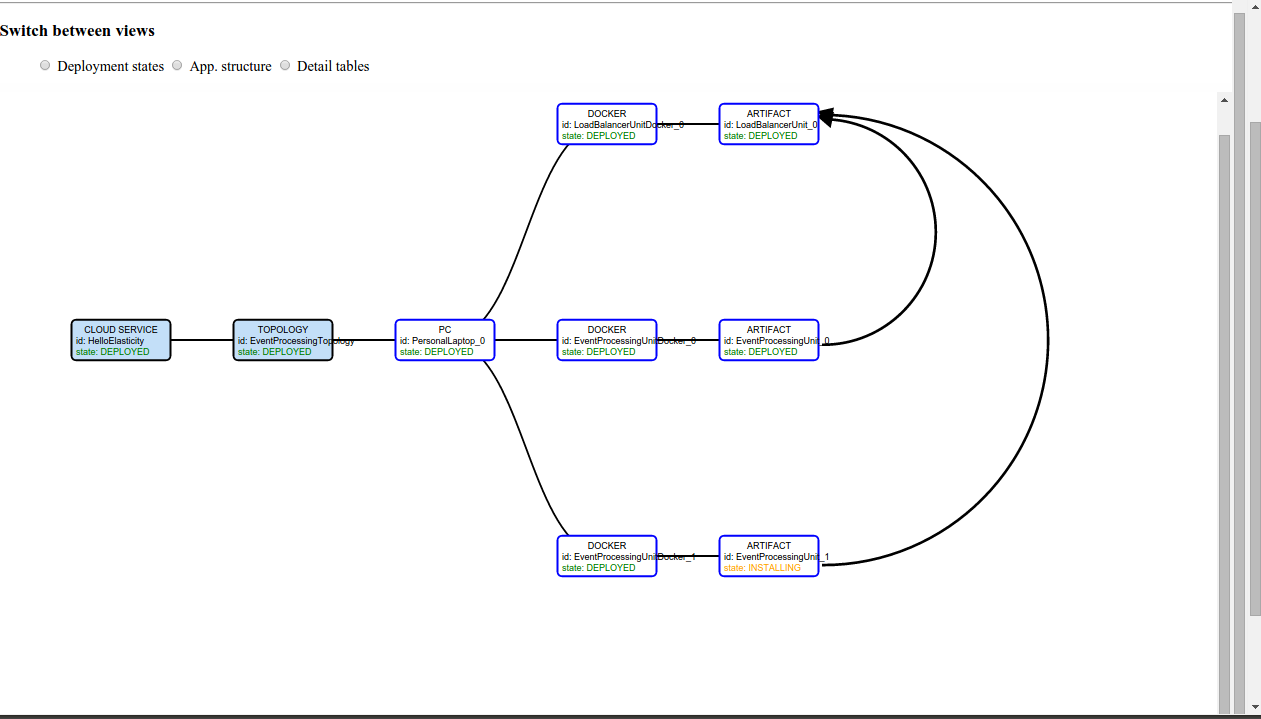

2. Visualize service deployment progress

Open COMOT Web and select the Deployment and Management tab.

3. Connect (through SSH) to the machine hosting COMOT

In the current example, the service is deployed in Docker containers, which can be listed by running:

# docker ps -a

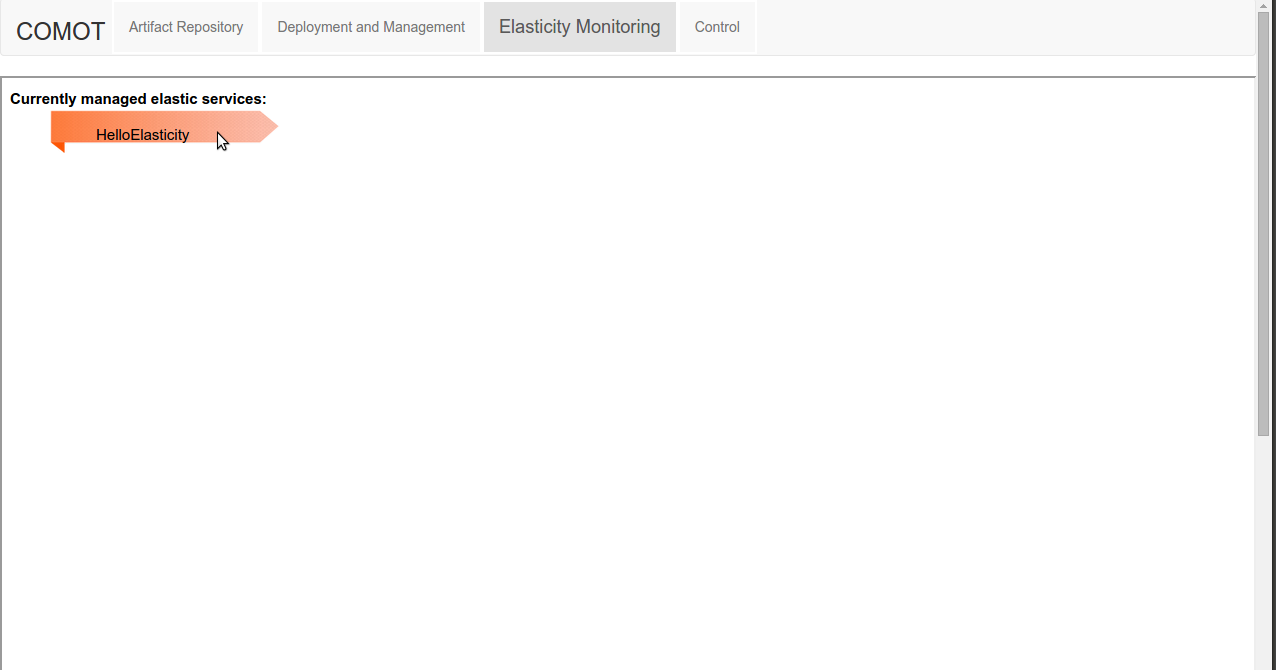

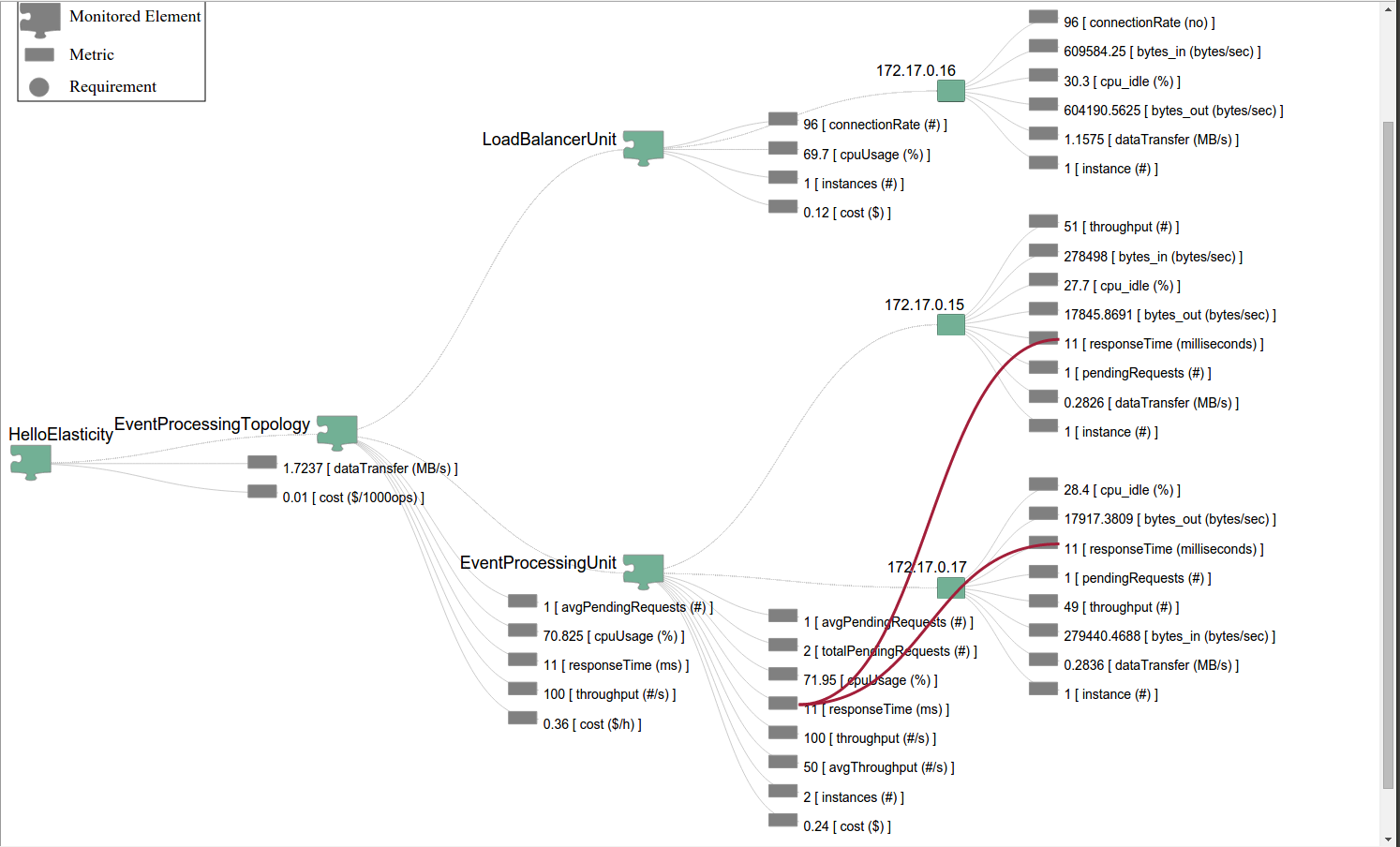

4. Visualize service monitoring information

- Open COMOT Web and select the Elasticity Monitoring tab

- Select the Hello Elasticity service from the monitored services list

A hierarchical monitoring view will open, showing the elastic service, which contains the specified topology, which in turn consists of units running in separate Docker container instances (identified by their distinct IPs).

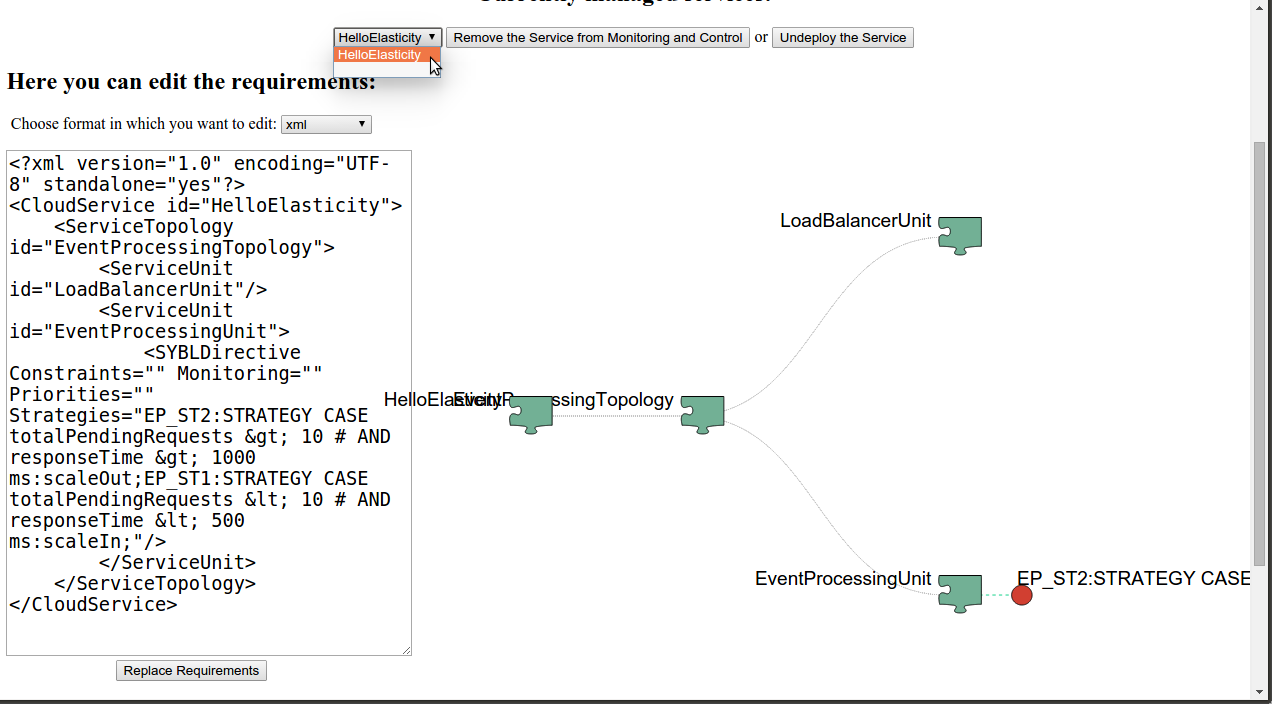

4. Visualize service elasticity requirements

- Open COMOT Web and select the Control tab

- Select the Hello Elasticity service from the monitored services drop-down list

If required, a user can change the supplied elasticity requirements during run-time, using one of the supported editing formats.

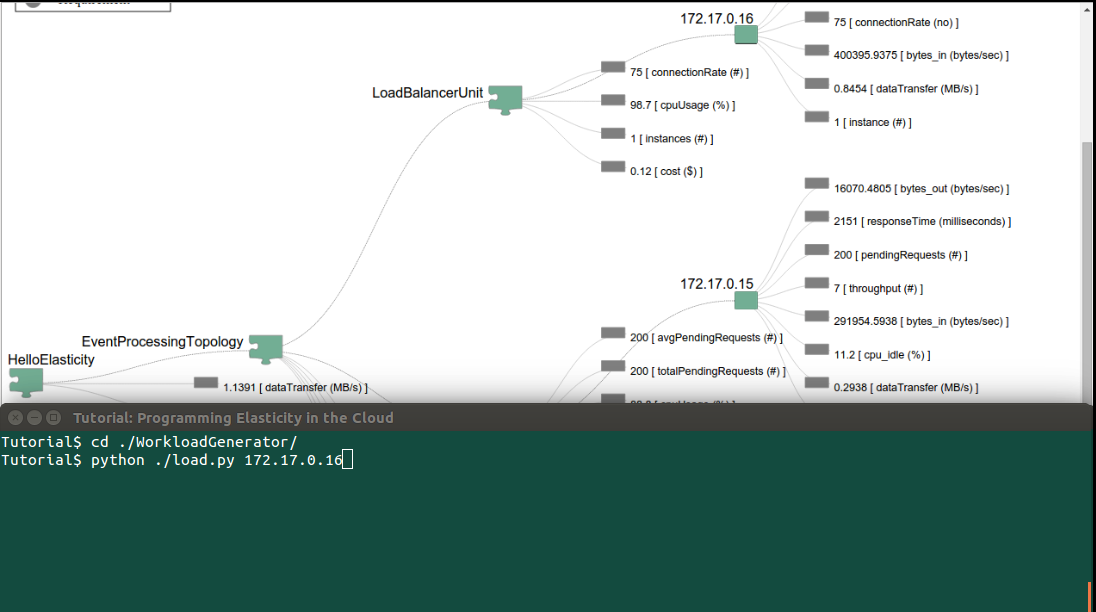

5. Simulate service workload

- Open a terminal on the COMOT machine and change to the folder in which COMOT was deployed
- Run the workload generator, replacing LOAD_BALANCER_IP with the IP of the single LoadBalancerUnit instance reported in MELA:

```
$ cd ./WorkloadGenerator
$ python ./load.py LOAD_BALANCER_IP
```

Note: If the default values of the load script are not enough to trigger scaling actions, the script takes four additional arguments: [minimum # of requests], [maximum # of requests], [number of iterations with the same # of requests], [requests change value].

If needed, pass all four load parameters, as in the example below:

```
$ python ./load.py LOAD_BALANCER_IP 300 500 5 1
```

This issues 300 requests 5 times, then 301 requests 5 times, and so on up to 500, after which the load decreases. Observe the resulting elasticity actions.
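The argument description above can be read as a schedule of request counts. The following Java sketch models only the ascending phase of that schedule; it is our reading of the description, not the code of `load.py`, and it assumes the maximum (500) is included. The decrease phase after the maximum is not modeled. The class and method names are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

class LoadSchedule {

    // Ascending phase: each request count is issued `iterations` times, then raised
    // by `step`, until `max` is reached (assumed inclusive).
    static List<Integer> ascendingPhase(int min, int max, int iterations, int step) {
        if (step <= 0 || iterations <= 0 || min > max) {
            throw new IllegalArgumentException("expected step > 0, iterations > 0, min <= max");
        }
        List<Integer> schedule = new ArrayList<>();
        for (int requests = min; requests <= max; requests += step) {
            for (int i = 0; i < iterations; i++) {
                schedule.add(requests);
            }
        }
        return schedule;
    }

    public static void main(String[] args) {
        List<Integer> schedule = ascendingPhase(300, 500, 5, 1);
        System.out.println(schedule.size());         // 1005 rounds: 201 levels x 5
        System.out.println(schedule.subList(0, 7));  // [300, 300, 300, 300, 300, 301, 301]
    }
}
```

With these arguments the ramp-up alone takes 1005 rounds, so the load grows slowly; a larger change value (e.g., 10) reaches the maximum much faster.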

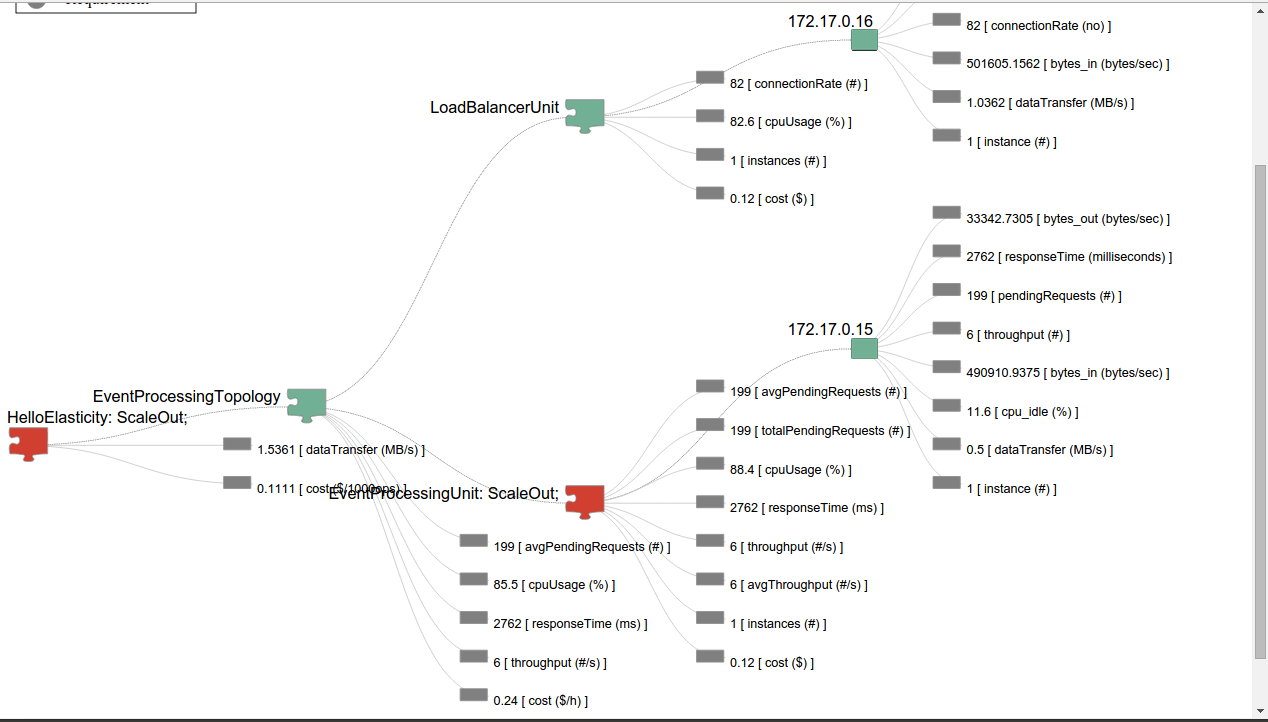

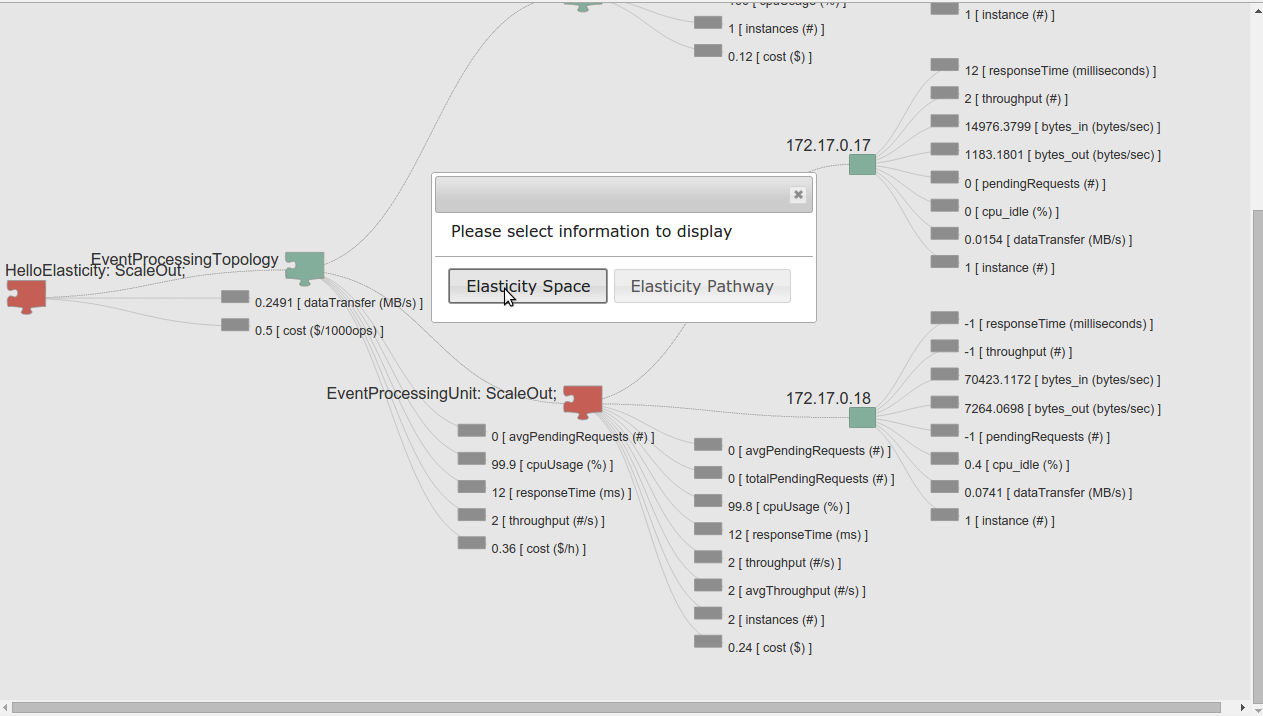

The load will cause the specified requirements (responseTime < 1 s and totalPendingRequests < 10) to be violated, triggering scale-out actions.
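The conditions involved can be summarized as a small decision function. The Java sketch below is a hypothetical illustration, not rSYBL's control logic: it checks the requirement named above (responseTime < 1 s, i.e., 1000 ms, and totalPendingRequests < 10) and the EP_ST1 scale-in strategy from the example (responseTime < 500 ms and avgThroughput < 50). Giving scale out priority over scale in, and ignoring timing aspects such as waiting for a previous action to finish, are simplifying assumptions.

```java
class ElasticityDecision {

    enum Action { SCALE_OUT, SCALE_IN, NONE }

    static Action decide(double responseTimeMs, double totalPendingRequests, double avgThroughput) {
        // Requirement: responseTime < 1 s AND totalPendingRequests < 10; a violation triggers scale out.
        boolean requirementViolated = !(responseTimeMs < 1000 && totalPendingRequests < 10);
        if (requirementViolated) {
            return Action.SCALE_OUT;
        }
        // Strategy EP_ST1: WHEN responseTime < 500 ms AND avgThroughput < 50 : SCALE IN
        if (responseTimeMs < 500 && avgThroughput < 50) {
            return Action.SCALE_IN;
        }
        return Action.NONE;
    }

    public static void main(String[] args) {
        System.out.println(decide(1200, 15, 80)); // SCALE_OUT
        System.out.println(decide(300, 2, 20));   // SCALE_IN
        System.out.println(decide(700, 5, 60));   // NONE
    }
}
```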

The progress of these actions can be monitored in COMOT Web, in the Deployment and Management tab.
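As described in the example, a scaling action can consist of several primitives executed in order, e.g., a scale in that first runs the unit's STOP lifecycle action (deregistering the instance from the load balancer) and then deallocates the container. The Java sketch below models such a composite capability as an ordered list of named steps, recording progress and stopping at the first failure. It is a hypothetical illustration; the class names and the stop-on-failure policy are our assumptions, not COMOT's implementation.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.BooleanSupplier;

class CompositeCapability {

    // One named primitive of a capability; the supplier returns true on success.
    static final class Primitive {
        final String name;
        final BooleanSupplier action;

        Primitive(String name, BooleanSupplier action) {
            this.name = name;
            this.action = action;
        }
    }

    // Executes the primitives in order, logging each step; stops at the first failure.
    static List<String> execute(String capability, List<Primitive> primitives) {
        List<String> progress = new ArrayList<>();
        for (Primitive p : primitives) {
            boolean ok = p.action.getAsBoolean();
            progress.add(capability + ": " + p.name + (ok ? " done" : " FAILED"));
            if (!ok) {
                break;
            }
        }
        return progress;
    }

    public static void main(String[] args) {
        // Hypothetical scale in of one event processing instance; the lambdas stand in
        // for the real operations and always succeed here.
        List<Primitive> scaleIn = List.of(
                new Primitive("run STOP lifecycle action (deregister from load balancer)", () -> true),
                new Primitive("deallocate container", () -> true));
        execute("scaleIn", scaleIn).forEach(System.out::println);
    }
}
```

Running the deregistration step first ensures the load balancer stops routing requests to an instance before that instance disappears.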

6. Visualize metric composition and structuring after scale out

In general, elastic cloud services can reconfigure and scale individual units or the whole service at run time, driven by various elasticity requirements. Because unit instances running in virtual containers, such as virtual machines or Docker containers, are created and destroyed dynamically at run time, monitoring information associated only with individual containers is lost during scale-in operations that destroy them.

Thus, we use metric composition to associate monitoring information with the service structure, selecting only the metrics we consider important for the elasticity of the service.

To see how metrics are aggregated in this example, click or double-click the gray icon next to any metric. The need to aggregate metrics becomes more visible when more instances of the Event Processing Unit are running.
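The idea of composing instance-level metrics into unit-level metrics can be sketched as follows. This Java example is hypothetical: it assumes that response time is averaged and throughput is summed over the running Event Processing Unit instances, whereas the rules actually configured in the tutorial can be inspected through the gray icon next to each metric.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

class MetricComposition {

    enum Rule { AVG, SUM }

    // Combines the values reported by individual instances into one value per metric
    // for the whole unit, according to the rule chosen for that metric.
    static Map<String, Double> compose(List<Map<String, Double>> instances, Map<String, Rule> rules) {
        Map<String, Double> unit = new LinkedHashMap<>();
        for (Map.Entry<String, Rule> rule : rules.entrySet()) {
            double sum = 0;
            int count = 0;
            for (Map<String, Double> instance : instances) {
                Double value = instance.get(rule.getKey());
                if (value != null) {
                    sum += value;
                    count++;
                }
            }
            if (count == 0) {
                continue; // no instance reports this metric
            }
            unit.put(rule.getKey(), rule.getValue() == Rule.AVG ? sum / count : sum);
        }
        return unit;
    }

    public static void main(String[] args) {
        List<Map<String, Double>> instances = List.of(
                Map.of("responseTime", 400.0, "throughput", 30.0),
                Map.of("responseTime", 600.0, "throughput", 50.0));
        Map<String, Rule> rules = new LinkedHashMap<>();
        rules.put("responseTime", Rule.AVG); // assumed rule
        rules.put("throughput", Rule.SUM);   // assumed rule
        System.out.println(compose(instances, rules)); // {responseTime=500.0, throughput=80.0}
    }
}
```

Because the composed values belong to the unit rather than to a container, they remain available when a scale in removes one of the instances.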

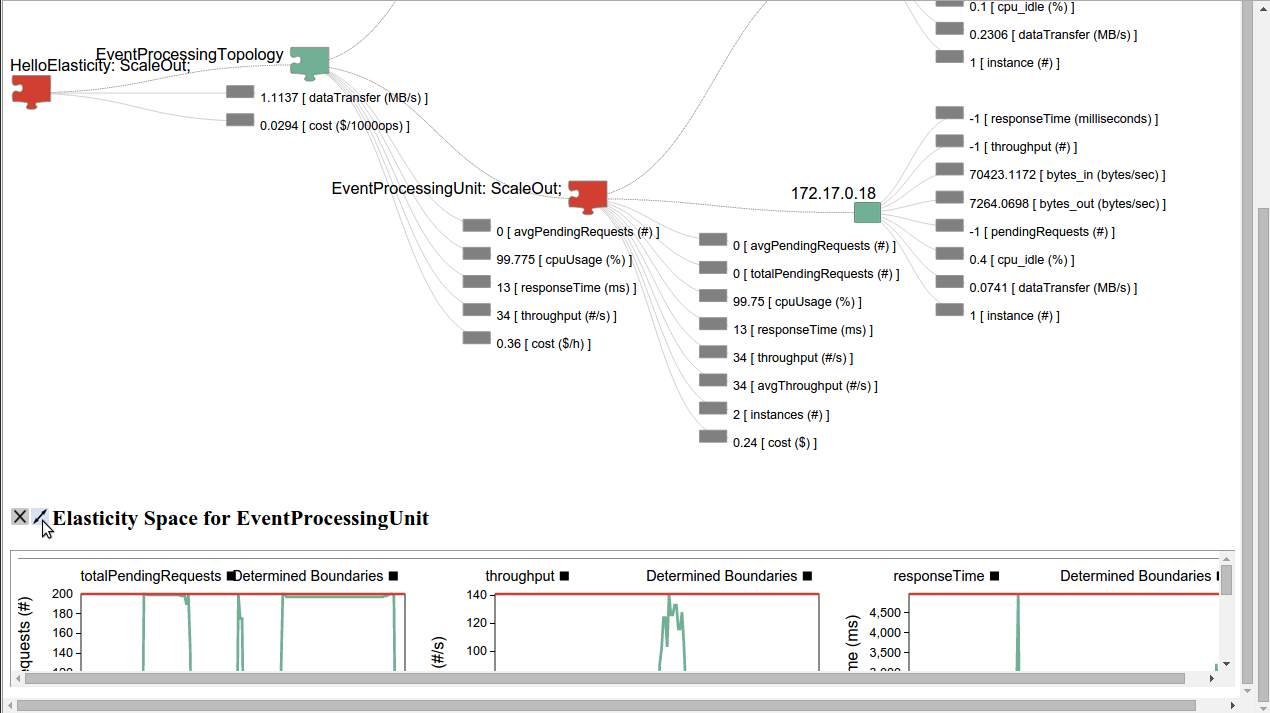

7. Visualize service Elasticity Space

In the Elasticity Monitoring tab, click on the component icon below the Event Processing Unit name, and select Elasticity Space from the dialog.

Enlarge the space window by clicking the maximize button, and investigate the historical monitoring information recorded for each of the selected metrics of that unit.
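As a simplified illustration of reading such historical data, the following Java sketch computes, for each metric, the lowest and highest values observed across a series of monitoring snapshots. It does not reproduce MELA's Elasticity Space computation; class and method names are hypothetical.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

class MetricRanges {

    // Smallest and largest value observed for one metric.
    static final class Range {
        final double min;
        final double max;

        Range(double min, double max) {
            this.min = min;
            this.max = max;
        }

        @Override
        public String toString() {
            return "[" + min + ", " + max + "]";
        }
    }

    // Scans a history of monitoring snapshots (metric name -> value) and returns the
    // observed range of every metric, widening it snapshot by snapshot.
    static Map<String, Range> ranges(List<Map<String, Double>> history) {
        Map<String, Range> result = new LinkedHashMap<>();
        for (Map<String, Double> snapshot : history) {
            for (Map.Entry<String, Double> m : snapshot.entrySet()) {
                double v = m.getValue();
                result.merge(m.getKey(), new Range(v, v),
                        (old, cur) -> new Range(Math.min(old.min, cur.min), Math.max(old.max, cur.max)));
            }
        }
        return result;
    }

    public static void main(String[] args) {
        List<Map<String, Double>> history = List.of(
                Map.of("responseTime", 420.0),
                Map.of("responseTime", 950.0),
                Map.of("responseTime", 610.0));
        System.out.println(ranges(history)); // {responseTime=[420.0, 950.0]}
    }
}
```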

Tutorial flow video

Publications

Please find below relevant publications with respect to rSYBL, MELA and SALSA

rSYBL

- Georgiana Copil, Demetris Trihinas, Hong-Linh Truong, Daniel Moldovan, George Pallis, Schahram Dustdar, Marios Dikaiakos, "ADVISE - a Framework for Evaluating Cloud Service Elasticity Behavior", 12th International Conference on Service Oriented Computing, Paris, France, 3-6 November 2014. Best Paper Award. http://link.springer.com/chapter/10.1007/978-3-662-45391-9_19

- Georgiana Copil, Daniel Moldovan, Hong-Linh Truong, Schahram Dustdar, "Multi-Level Elasticity Control of Cloud Services" (short paper), 11th International Conference on Service Oriented Computing, Berlin, Germany, 2-5 December 2013, http://dx.doi.org/10.1007/978-3-642-45005-1_31

- Georgiana Copil, Daniel Moldovan, Hong-Linh Truong, Schahram Dustdar, "SYBL: an Extensible Language for Controlling Elasticity in Cloud Applications", 13th IEEE/ACM International Symposium on Cluster, Cloud and Grid Computing (CCGrid), Delft, the Netherlands, 14-16 May 2013, http://dx.doi.org/10.1109/CCGrid.2013.42

MELA

- Daniel Moldovan, Georgiana Copil, Hong-Linh Truong, Schahram Dustdar, "On Analyzing Elasticity Relationships of Cloud Services", 6th International Conference on Cloud Computing Technology and Science, Singapore, 15-18 December 2014 (accepted)

- Daniel Moldovan, Georgiana Copil, Hong-Linh Truong, Schahram Dustdar, "MELA: Elasticity Analytics for Cloud Services", International Journal of Big Data Intelligence, Special issue for publishing IEEE CloudCom 2013 selected papers

- Daniel Moldovan, Georgiana Copil, Hong-Linh Truong, Schahram Dustdar, "MELA: Monitoring and Analyzing Elasticity of Cloud Services", 5th International Conference on Cloud Computing, CloudCom, Bristol, UK, 2-5 December 2013, http://dx.doi.org/10.1109/CloudCom.2013.18

SALSA

- Duc-Hung Le, Hong-Linh Truong, Georgiana Copil, Stefan Nastic, Schahram Dustdar, "SALSA: a Framework for Dynamic Configuration of Cloud Services", 6th International Conference on Cloud Computing Technology and Science, Singapore, 15-18 December 2014 (accepted)