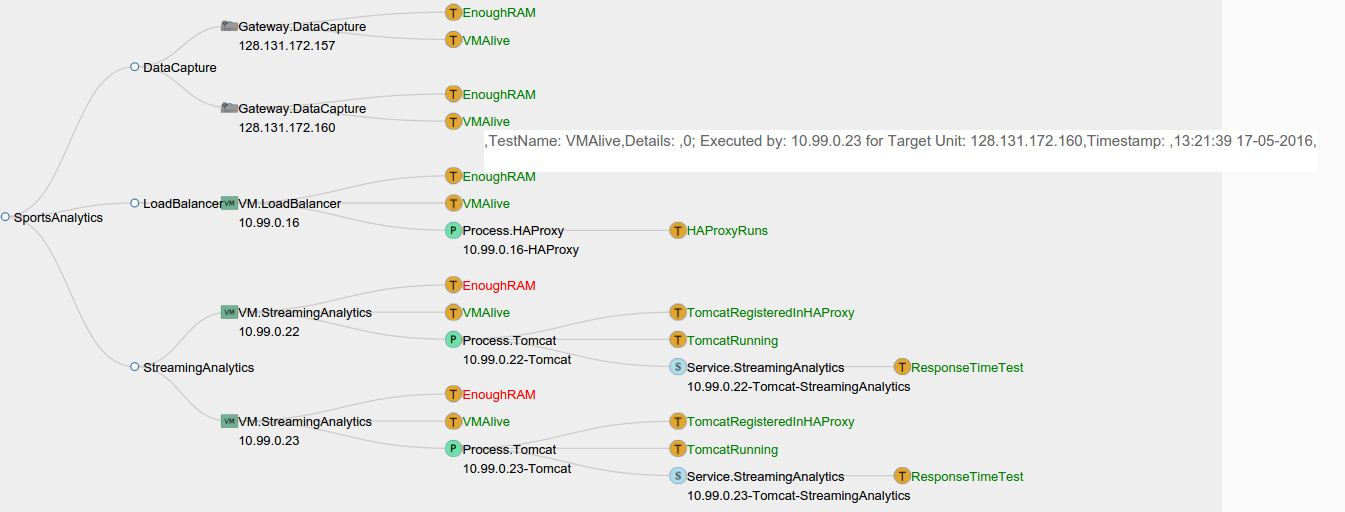

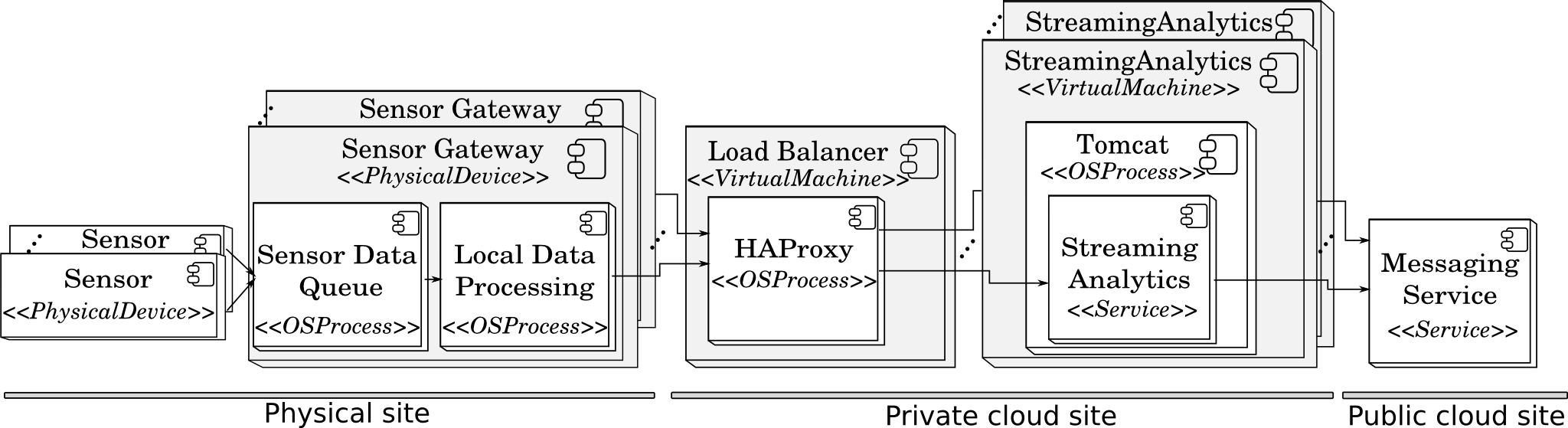

Example of an Elastic Cyber-Physical System (eCPS) for analysis of streaming data

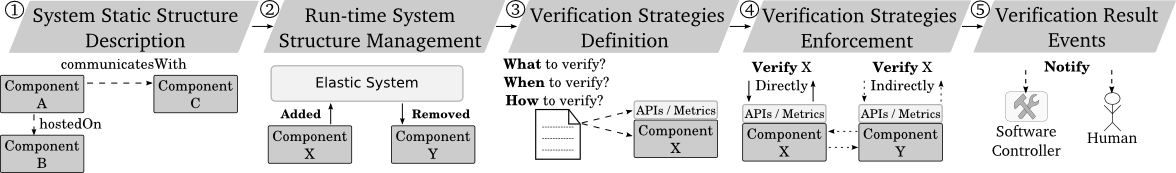

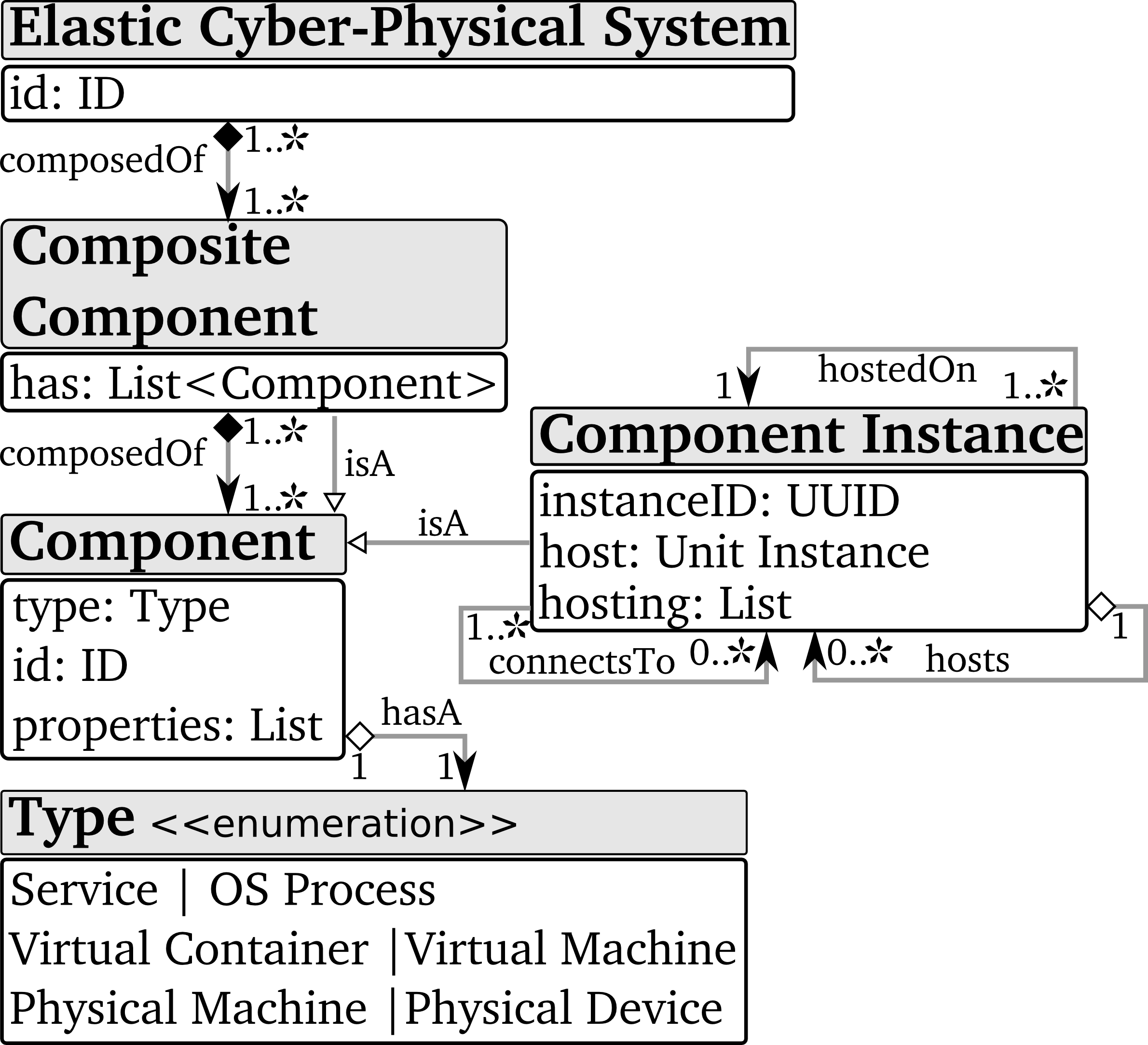

A Cyber-Physical System (CPS) is a system with components deployed both in the physical world (e.g., industrial machines, smart buildings) and in computing environments (e.g., data centers, cloud infrastructures). For example, a smart factory can be considered a CPS whose components are deployed (i) inside assembly robots, (ii) inside sensor gateways that collect environmental conditions in the factory, and (iii) in a private data center that analyzes the data collected from the robots and sensor gateways. An Elastic Cyber-Physical System (eCPS) can additionally add and remove components at run-time, ranging from computing resources to physical devices. Elasticity enables an eCPS to align its costs, quality, and resource usage with its load and its owner's requirements.
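To make the definition concrete, the Python below is a minimal sketch that models an eCPS as a registry of components, each tagged as physical or cyber, which can be added and removed at run-time. The class and component names (`ElasticCPS`, `Component`, `robot-1`, and so on) are hypothetical illustrations, not part of any existing tool; a real eCPS would also have to provision or decommission the underlying devices and resources, which is omitted here.

```python
from dataclasses import dataclass, field
from enum import Enum


class Kind(Enum):
    PHYSICAL = "physical"  # e.g., assembly robots, sensor gateways
    CYBER = "cyber"        # e.g., VMs or services in a data center


@dataclass(frozen=True)
class Component:
    name: str
    kind: Kind


@dataclass
class ElasticCPS:
    # Hypothetical registry of the components currently part of the system.
    components: dict[str, Component] = field(default_factory=dict)

    def add(self, component: Component) -> None:
        """Elasticity: add a component at run-time."""
        if component.name in self.components:
            raise ValueError(f"duplicate component: {component.name}")
        self.components[component.name] = component

    def remove(self, name: str) -> Component:
        """Elasticity: remove a component at run-time."""
        return self.components.pop(name)

    def count(self, kind: Kind) -> int:
        """Number of components of the given kind."""
        return sum(1 for c in self.components.values() if c.kind is kind)


factory = ElasticCPS()
factory.add(Component("robot-1", Kind.PHYSICAL))
factory.add(Component("gateway-1", Kind.PHYSICAL))
factory.add(Component("analytics-vm-1", Kind.CYBER))
# Scale out under higher load, then scale back in when load drops.
factory.add(Component("analytics-vm-2", Kind.CYBER))
factory.remove("analytics-vm-2")
print(factory.count(Kind.PHYSICAL), factory.count(Kind.CYBER))  # 2 1
```

Tagging each component with its kind keeps physical and cyber elasticity in one model, which mirrors the definition: the same add/remove operations apply whether the component is a device or a computing resource.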

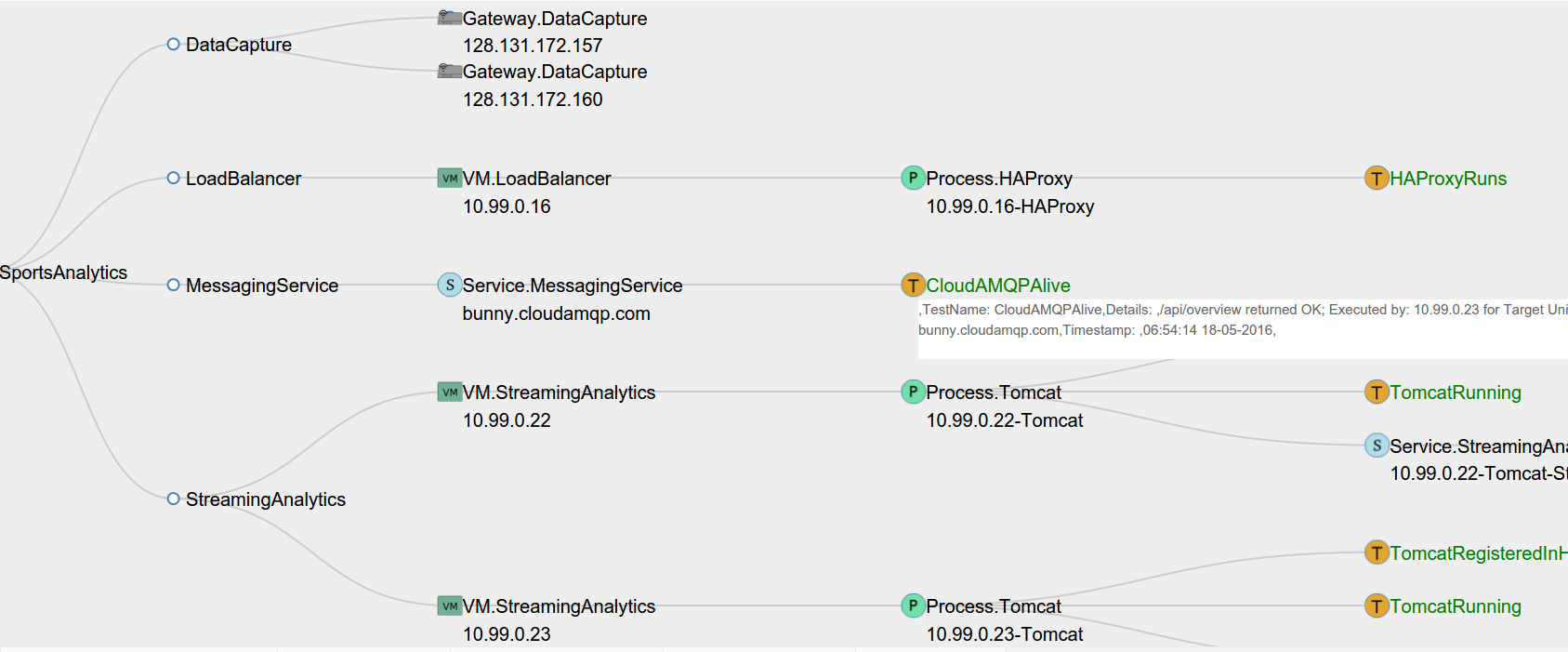

The owner of a smart factory builds an elastic cyber-physical system (eCPS) for analysis of streaming data coming from the factory's industrial robots and environmental sensors. The system can scale to adapt to changes in load or factory requirements by adding and removing both physical and cyber components. The factory's robots and sensors send data to physical devices called Sensor Gateways. The gateways perform local data processing and send the data through an HAProxy HTTP Load Balancer to Streaming Analytics services hosted in virtual machines in a Private Cloud. The Streaming Analytics service is deployed as a software artifact in a Tomcat web server. Selected analytics results are published to interested parties through a third-party Messaging Service offered as-is by a Public Cloud provider.
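The architecture described above can be sketched as two relations: which component sends data to which, and which component is hosted on which. The Python below is a minimal model under that assumption. Node names such as `haproxy-lb` are hypothetical labels, and the graph is drawn at the level of component types, so the several Streaming Analytics instances behind the load balancer are collapsed into a single node.

```python
# Data-flow relation: each component lists the components it sends data to.
# Node names are hypothetical labels for the component types in the example.
DATA_FLOW = {
    "robot": ["sensor-gateway"],
    "env-sensor": ["sensor-gateway"],
    "sensor-gateway": ["haproxy-lb"],
    "haproxy-lb": ["streaming-analytics"],
    "streaming-analytics": ["messaging-service"],
    "messaging-service": [],  # third-party service in the public cloud
}

# Hosting relation: each software component maps to what it runs on.
HOSTED_ON = {
    "streaming-analytics": "tomcat",
    "tomcat": "vm",
    "vm": "private-cloud",
    "messaging-service": "public-cloud",
}


def hosting_stack(component: str) -> list[str]:
    """Follow hosted-on links from a component down to its infrastructure."""
    stack = [component]
    while stack[-1] in HOSTED_ON:
        stack.append(HOSTED_ON[stack[-1]])
    return stack


def reachable(src: str, dst: str) -> bool:
    """Depth-first search over the data-flow relation."""
    seen = set()
    todo = [src]
    while todo:
        node = todo.pop()
        if node == dst:
            return True
        if node in seen:
            continue
        seen.add(node)
        todo.extend(DATA_FLOW.get(node, []))
    return False


print(hosting_stack("streaming-analytics"))
# ['streaming-analytics', 'tomcat', 'vm', 'private-cloud']
print(reachable("robot", "messaging-service"))  # True
```

Keeping data flow and hosting as separate relations makes it easy to ask different questions of the same system: whether robot data can still reach the Messaging Service, or which VM and cloud a given service ultimately depends on.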

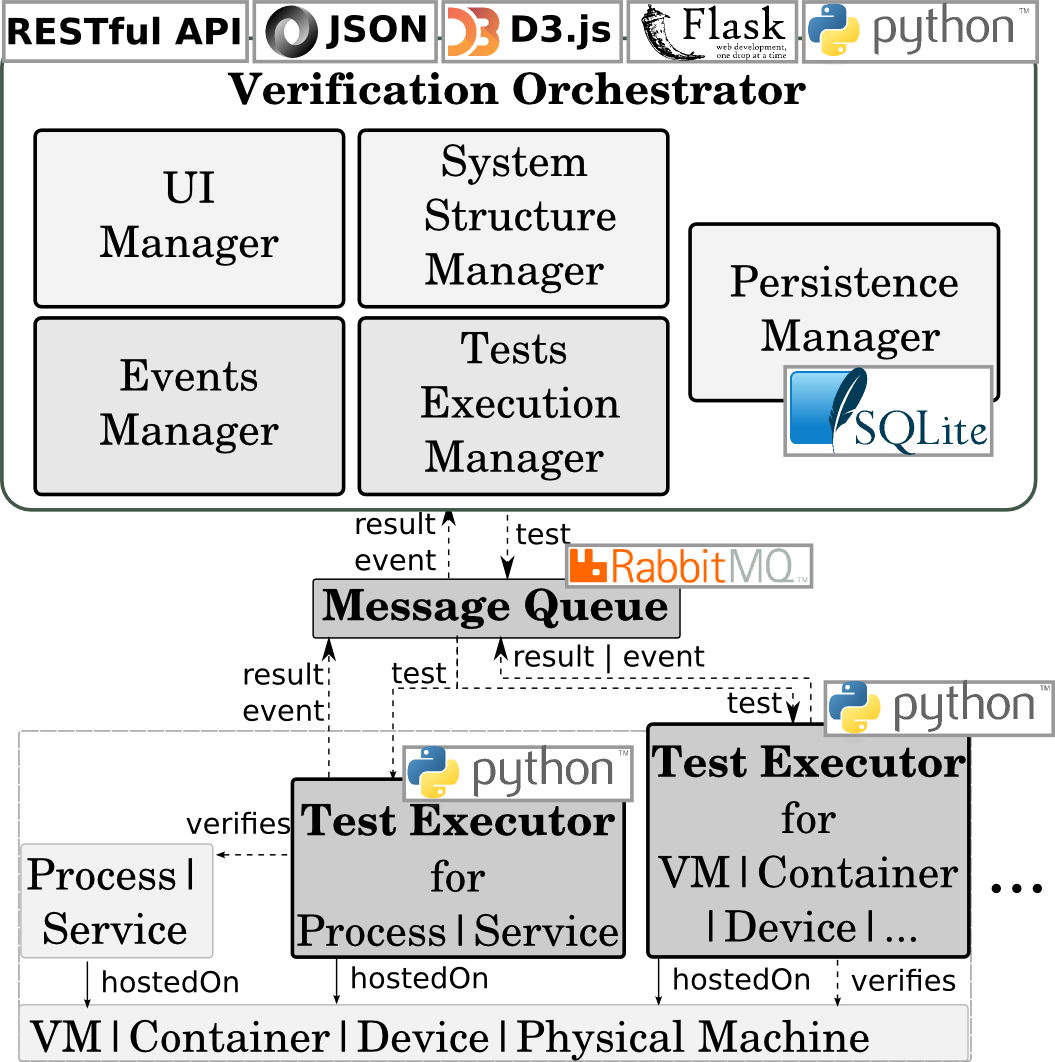

The smart factory owner wants to ensure that the system is healthy and operates within specified parameters, especially after scaling actions that add or remove components. That is, the system must be correctly configured, its components must be deployed and running, and it must deliver the expected performance.
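The three health conditions can be expressed as a check that runs after each scaling action. The sketch below assumes that per-component status (configuration, run state, and a latency metric) has already been collected by some monitoring mechanism. `ComponentStatus`, `verify`, and the latency threshold are hypothetical and stand in for whatever checks a real deployment would use.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ComponentStatus:
    name: str
    configured: bool                # e.g., registered as a load balancer backend
    running: bool                   # e.g., process or VM is up
    latency_ms: Optional[float]     # observed performance; None if unavailable


def verify(statuses: list[ComponentStatus], max_latency_ms: float) -> list[str]:
    """Return human-readable problems; an empty list means the system is healthy."""
    problems = []
    for s in statuses:
        if not s.configured:
            problems.append(f"{s.name}: not correctly configured")
        if not s.running:
            problems.append(f"{s.name}: not deployed/running")
        # Performance is only meaningful for components that are running.
        elif s.latency_ms is None or s.latency_ms > max_latency_ms:
            problems.append(f"{s.name}: performance outside limits")
    return problems


after_scale_out = [
    ComponentStatus("streaming-analytics-1", True, True, 40.0),
    # New instance is up but not yet added to the load balancer.
    ComponentStatus("streaming-analytics-2", False, True, 35.0),
    ComponentStatus("sensor-gateway-1", True, True, 12.0),
]
for problem in verify(after_scale_out, max_latency_ms=100.0):
    print(problem)
# streaming-analytics-2: not correctly configured
```

In this example the newly added analytics instance is running and fast enough, yet the check still flags it, because a component that is deployed but not wired into the load balancer receives no traffic. This is the kind of misconfiguration that scaling actions can introduce.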